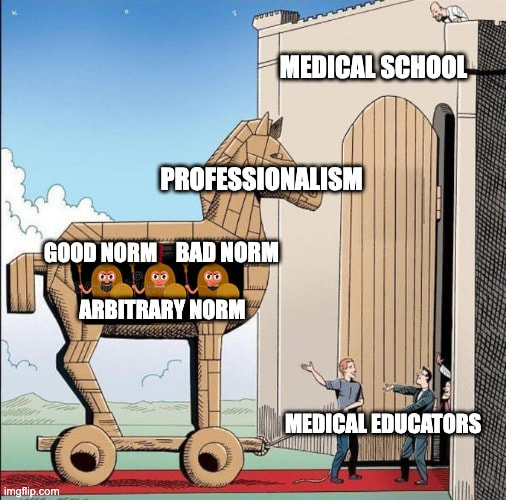

Medical students are socialized to feel like we don't understand clinical practice well enough to have strong opinions about it. This happens despite the wisdom, thoughtfulness, and good intentions of medical educators; it happens because of basic features of medical education. First, the structure of medical school makes medical students feel younger—and correspondingly less competent, reasonable, and mature—than we are. Two-thirds of medical students take gap years between college and medical school, so many of us go from living in apartments to living in dorms; from working full-time jobs to attending mandatory 8am lectures; from freely scheduling doctors' appointments to being unable to make plans because we haven't received our schedules. I once found myself moving into a new apartment at 11pm because my lease ended that day, but I had been denied permission to leave class early. Professionalism assessments also play a role. "Professionalism" is not well defined, and as a result, "behaving professionally" has more or less come to mean "adhering to the norms of the medical profession," or even just "adhering to the norms the people evaluating you have decided to enforce." These include ethical norms, behavioral norms, etiquette norms, and any other norms you might imagine. For instance, a few months into medical school, my class received this email: "Moving the bedframes violates the lease agreement that you signed upon entering [your dorm]. You may have heard a rumor from more senior students that this is an acceptable practice. Unfortunately, it is not... If you have moved or accepted a bed, and we do not hear from you it will be seen as a professionalism issue and be referred to the appropriate body." (emphasis added) When someone tells you early in your training that something is a professionalism issue, your reaction may be "hm, I don't really see why moving beds is an issue that's relevant to the medical profession, but maybe I'll come to understand." 
First-year medical students are inclined to be deferential because we recognize how little we know about the medical profession. We do not understand the logic behind, for instance, rounds, patient presentations, and 28-hour shifts. Many of these norms eventually start to make sense. I've gone from wondering why preceptors harped so emphatically on being able to describe a patient in a single sentence to appreciating the efficiency and clarity of a perfect one-liner. But plenty of norms in medicine are just bad. Some practices are manifestations of paternalism (e.g., answering patients' questions in a vague, non-committal way), racism or sexism (e.g., undertreating Black patients' pain), antiquated traditions (e.g., wearing coats that may transmit disease), or the brokenness of the US health care system (e.g., not telling patients the cost of their care). The bad practices are often subtle, and even when they aren't, it can take a long time to realize they aren't justifiable. It took me seeing multiple women faint from pain during gynecologic procedures before I felt confident enough to tentatively suggest that we do things differently. My default stance was "there must be some reason they're doing things this way," and it required an overwhelming amount of evidence to change my mind.

Other professions undoubtedly have a similar problem: new professionals in any field may not feel that they can question established professional norms until they've been around long enough that the norms have become, well, normalized. As a result, it may often be outsiders who push for change. For instance, it was initially parents—not teachers—who lobbied for the abolition of corporal punishment in schools. Similarly, advocacy groups have created helplines to support patients appealing surprise medical bills, even as hospitals have illegally kept prices opaque. The challenge for actors outside of medicine, though, is that medicine is a complicated and technical field, and it is hard to challenge norms that you do not fully understand. Before I was a medical student, I had a doctor who repeatedly "examined" my injured ankle over my shoe. I didn't realize until my first year of medical school that you can't reliably examine an ankle this way. In some ways, medical students are uniquely well-positioned to form opinions about which practices are good and bad. This is because we are both insiders and outsiders. We have some understanding of how medicine works, but haven't yet internalized all of its norms. We're expected to learn, so can ask "Why is this done that way?" and evaluate the rationale. And we rotate through different specialties, so can compare across them, assessing which practices are pervasive and which are specific to a given context. Our insider/outsider status could be both a weakness and a strength: we may not know medicine, but our time spent working outside of medicine has left us with other knowledge; we may not understand clinical practice yet, but we haven't been numbed to it, either. One of the hardest things medical students have to do is remain open and humble enough to recognize that many practices will one day make sense, while remaining clear-eyed and critical about those that won't. But the concept of professionalism blurs our vision. 
It gives us a strong incentive not to form our own opinions because we are being graded on how well we emulate norms. Assuming there are good reasons for these norms resolves cognitive dissonance, while asking hard questions about them risks calling other doctors' professionalism into question. Thus, professionalism makes us less likely to trust our opinions about the behaviors we witness in the following way. First, professionalism is defined so broadly that norms only weakly tied to medicine fall under its purview. Second, we know we do not understand the rationales underlying many professional norms, so are inclined to defer to more senior clinicians about them. In combination, we are set up to place little stock in the opinions we form about the things we observe in clinical settings, including those we're well-positioned to form opinions about. In the absence of criticism and pushback, entrenched norms are liable to remain entrenched.

This piece was originally published on Bill of Health, the blog of Petrie-Flom Center at Harvard Law School.

There has been too little evaluation of ethics courses in medical education in part because there is not consensus on what these courses should be trying to achieve. Recently, I argued that medical school ethics courses should help trainees to make more ethical decisions. I also reviewed evidence suggesting that we do not know whether these courses improve decision making in clinical practice. Here, I consider ways to assess the impact of ethics education on real-world decision making and the implications these assessments might have for ethics education. The Association of American Medical Colleges (AAMC) includes “adherence to ethical principles” among the “clinical activities that residents are expected to perform on day one of residency.” Notably, the AAMC does not say graduates should merely understand ethical principles; rather, they should be able to abide by them. This means that if ethics classes impart knowledge and skills — say, an understanding of ethical principles or improved moral reasoning — but don’t prepare trainees to behave ethically in practice, they have failed to accomplish their overriding objective. Indeed, a 2022 review on the impact of ethics education concludes that there is a “moral obligation” to show that ethics curricula affect clinical practice. Unfortunately, we have little sense of whether ethics courses improve physicians’ ethical decision-making in practice. Ideally, assessments of ethics curricula should focus on outcomes that are clinically relevant, ethically important, and measurable. Identifying such outcomes is hard, primarily because many of the goals of ethics curricula cannot be easily measured. For instance, ethics curricula may improve ethical decision-making by increasing clinicians’ awareness of the ethical issues they encounter, enabling them to either directly address these dilemmas or seek help. Unfortunately, this skill cannot be readily assessed in clinical settings. 
But other real-world outcomes are more measurable. Consider the following example: Physicians regularly make decisions about which patients have decision-making capacity (“capacity”). This determination matters both clinically and ethically, as it establishes whether patients can make medical decisions for themselves. (Notably, capacity is not a binary: patients can retain capacity to make some decisions but not others, or can retain capacity to make decisions with the support of a surrogate.) Incorrectly determining that a patient has or lacks capacity can strip them of fundamental rights or put them at risk of receiving care they do not want. It is thus important that clinicians correctly determine which patients possess capacity and which do not. However, although a large percentage of hospitalized patients lack capacity, physicians often do not feel confident in their ability to assess capacity, fail to recognize incapacity in most patients who lack it, and often disagree on which patients have capacity. Finally, although capacity is challenging to assess, there are relatively clear and widely agreed upon criteria for assessing it, and evaluation tools with high interrater reliability. Given this, it would be both possible and worthwhile to determine whether medical trainees’ ability to assess capacity in clinical settings is enhanced by ethics education. Here are two potential approaches to evaluating this: first, medical students might perform observed capacity assessments on their psychiatry rotations, just as they perform observed neurological exams on their neurology rotations. Students’ capacity assessments could be compared to a “gold standard,” or the assessments of physicians who have substantial training and experience in evaluating capacity using structured interviewing tools. Second, residents who consult psychiatry for capacity assessments could be asked to first determine whether they think a patient has capacity and why. 
This determination could be compared with the psychiatrist’s subsequent assessment. Programs could then randomize trainees to ethics training — or to a given type of ethics training — to determine the effect of ethics education on the quality and accuracy of trainees’ capacity assessments. Of course, ethics curricula should do much more than make trainees good at assessing capacity. But measuring one clinically and ethically significant endpoint could provide insight into other aspects of ethics education in two important ways. First, if researchers were to determine that trainees do a poor job of assessing capacity because they have too little time, or cannot remember the right questions to ask, or fail to check capacity in the first place, this would point to different solutions — some of which education could help with, and others of which it likely would not. Second, if researchers were to determine that trainees generally do a poor job of assessing capacity because of a given barrier, this could have implications for other kinds of ethical decisions. For instance, if researchers were to find that trainees fail to perform thorough capacity assessments primarily because of time constraints, other ethical decisions would likely be impacted as well. Moreover, this insight could be used to improve ethics curricula. After all, ethics classes should teach clinicians how to respond to the challenges they most often face. Not all (or perhaps even most) aspects of clinicians’ ethical decision-making are amenable to these kinds of evaluations in clinical settings, meaning other types of evaluations will play an important role as well. But many routine practices — assessing capacity, acquiring informed consent, advance care planning, and allocating resources, for instance — are. And given the importance of these endpoints, it is worth determining whether ethics education improves clinicians’ decision making across these domains. 
This piece was originally published on Bill of Health, the blog of Petrie-Flom Center at Harvard Law School.

Medical students spend a lot of time learning about conditions they will likely never treat. This weak relationship between what students are taught and what they will treat has negative implications for patient care. Recently, I looked into discrepancies between U.S. disease burden in 2016 and how often conditions are mentioned in the 2020 edition of First Aid for the USMLE Step 1, an 832-page book sometimes referred to as the medical student’s bible. The content of First Aid provides insight into the material emphasized on Step 1 — the first licensing exam medical students take, and one that is famous for testing doctors on Googleable minutiae. This test shapes medical curricula and students’ independent studying efforts — before Step 1 became pass-fail, students would typically study for it for 70 hours a week for seven weeks, in addition to all the time they spent studying before this dedicated period. My review identified broad discrepancies between disease burden and the relative frequency with which conditions were mentioned in First Aid. For example, pheochromocytoma, a rare tumor that occurs in about one out of every 150,000 people per year, is mentioned 16 times in First Aid. By contrast, low back pain — the fifth leading cause of disability-adjusted life years, or DALYs, in the U.S., and a condition that has affected one in four Americans in the last three months — is mentioned only nine times. (Disease burden is commonly measured in DALYs, which combine morbidity and mortality into one metric. The leading causes of DALYs in the U.S. include major contributors to mortality, like ischemic heart disease and lung cancer, as well as major causes of morbidity, like low back pain.) Similarly, neck pain, which is the eleventh leading cause of DALYs, is mentioned just twice. Both neck and back pain are also often mentioned as symptoms of other conditions (e.g., multiple sclerosis and prostatitis), rather than as issues in and of themselves. 
Opioid use disorder, the seventh leading cause of DALYs in 2016 and a condition that killed more than 75,000 Americans last year, is mentioned only three times. Motor vehicle accidents are mentioned only four times, despite being the fifteenth leading cause of DALYs. There are some good reasons why Step 1 content is not closely tied to disease burden. The purpose of the exam is to assess students’ understanding and application of basic science principles to clinical practice. This means that several public health problems that cause significant disease burden — like motor vehicle accidents or gun violence — are barely tested. But it is not clear Step 2, an exam meant to “emphasize health promotion and disease prevention,” does much better. Indeed, in First Aid for the USMLE Step 2, back pain again is mentioned fewer times than pheochromocytoma. Similarly, despite dietary risks posing the greatest health threat to Americans (greater even than smoking), First Aid for the USMLE Step 2 says next to nothing about how to reduce these risks. More broadly, there may also be good reasons why medical curricula should not perfectly align with disease burden. First, more time should be devoted to topics that are challenging to understand or that teach broader physiologic lessons. Just as researchers can gain insights about common diseases by studying rare ones, students can learn broader lessons by studying diseases that cause relatively little disease burden. Second, after students begin their clinical training, their educations will be more closely tied to disease burden. When completing a primary care rotation, students will meet plenty of patients with back and neck pain. But the reasons some diseases are emphasized and taught about more than others often may be indefensible. Medical curricula seem to be greatly influenced by how well understood different conditions are, meaning curricula can wind up reflecting research funding disparities. 
For instance, although eating disorders cause substantial morbidity and mortality, research into them has been underfunded. As a result, no highly effective treatments targeting anorexia or bulimia nervosa have emerged, and remission rates are relatively low. Medical schools may not want to emphasize the limitations of medicine or devote resources to teaching about conditions that are multifactorial and resist neat packaging, meaning these disorders are often barely mentioned. But, although eating disorders are not well understood, thousands of papers have been written about them, meaning devoting a few hours to teaching medical students about them would still barely scratch the surface. And even when a condition is understudied or not well understood, it is worth explaining why. For instance, if heart failure with reduced ejection fraction is discussed more than heart failure with preserved ejection fraction, students may wrongly conclude this has to do with the relative seriousness of these conditions, rather than with the inherent challenge of conducting clinical trials with the latter population (because their condition is less amenable to objective inclusion criteria). Other reasons for curricular disparities may be even more insidious: for instance, the lack of attention to certain diseases may reflect the medical community’s perceived importance of these conditions, or whether they tend to affect more empowered or marginalized populations. The weak link between medical training and disease burden matters: if medical students are not taught about certain conditions, they will be less equipped to treat these conditions. They may also be less inclined to specialize in treating them or to conduct research on them. Thus, although students will encounter patients with back pain or who face dietary risks, if they and the physicians supervising them have not been taught much about caring for these patients, these patients likely will not receive optimal treatment. 
And indeed, there is substantial evidence that physicians feel poorly prepared to counsel patients on nutrition, despite this being one of the most common topics patients inquire about. If the lack of curricular attention reflects research and health disparities, failing to emphasize certain conditions may also compound these disparities. Addressing this problem requires understanding it. Researchers could start by assessing the link between disease burden and Step exam questions, curricular time, and other resources medical students rely on (like the UWorld Step exam question banks). Organizations that influence medical curricula — like the Association of American Medical Colleges and the Liaison Committee on Medical Education — should do the same. Medical schools should also incorporate outside resources to cover topics their curricula do not explore in depth, as several medical schools have done with nutrition education. But continuing to ignore the relationship between disease burden and curricular time does a disservice to medical students and to the patients they will one day care for.
This piece was originally published on Bill of Health, the blog of Petrie-Flom Center at Harvard Law School.

I recently argued that we need to evaluate medical school ethics curricula. Here, I explore how ethics courses became a key component of medical education and what we do know about them. Although ethics has been a recognized component of medical practice since Hippocrates’ time, ethics education is a more recent innovation. In the 1970s, the medical community was shaken by several high-profile lawsuits alleging unethical behavior by physicians. As medical care advanced — and categories like “brain death” emerged — doctors found themselves facing challenging new dilemmas and old ones more often. In response to this, in 1977, The Johns Hopkins University School of Medicine became the first medical school to incorporate ethics education into its curriculum. Throughout the 1980s and 1990s, medical schools increasingly began to incorporate ethics education into their curricula. By 2002, approximately 79 percent of U.S. medical schools offered a formal ethics course. Today, the Association of American Medical Colleges (AAMC) includes “adherence to ethical principles” among the competencies required of medical school graduates. As a result, all U.S. medical schools — and many medical schools around the world — require ethics training. There is some consensus on the content ethics courses should cover. The AAMC requires medical school graduates to “demonstrate a commitment to ethical principles pertaining to provision or withholding of care, confidentiality, informed consent.” Correspondingly, most medical school ethics courses review issues related to consent, end-of-life care, and confidentiality. But beyond this, the scope of these courses varies immensely (in part because many combine teaching in ethics and professionalism, and there is little consensus on what “professionalism” means). The format and design of medical school ethics courses also vary. 
A wide array of pedagogical approaches are employed: most rely on some combination of lectures, case-based learning, and small group discussions. But others employ readings, debates, or simulations with standardized patients. These courses also receive differing degrees of emphasis within medical curricula, with some schools spending less than a dozen hours on ethics education and others spending hundreds. (Notably, much of the research on the state of ethics education in U.S. medical schools is nearly twenty years old, though there is little reason to suspect that ethics education has converged during that time, given that medical curricula have in many ways become more diverse.) Finally, what can seem like consensus in approaches to ethics education can mask underlying differences. For instance, although many medical schools describe their ethics courses as “integrated,” schools mean different things by this (e.g., in some cases “integrated” means “interdisciplinary,” and in other cases it means “incorporated into other parts of the curriculum”). A study from this year reviewed evidence on interventions aimed at improving ethical decision-making in clinical practice. The authors identified eight studies of medical students. Of these, five used written tools to evaluate students’ ethical reasoning and decision-making, while three assessed students’ interactions with standardized patients or used objective structured clinical examinations (OSCEs). Three of these eight studies assessed U.S. students, the most recent of which was published in 1998. These studies found mixed results. One study found that an ethics course led recipients to engage in more thorough — but not necessarily better — reasoning, while another found that evaluators disagreed so often that it was nearly impossible to achieve consensus about students’ performances. 
The authors of a 2017 review assessing the effectiveness of ethics education note that it is hard to draw conclusions from the existing data, describing the studies as “vastly heterogeneous,” and bearing “a definite lack of consistency in teaching methods and curriculum.” The authors conclude, “With such an array, the true effectiveness of these methods of ethics teaching cannot currently be well assessed especially with a lack of replication studies.” The literature on ethics education thus has several gaps. First, many of the studies assessing ethics education in the U.S. are decades old. This matters because medical education has changed significantly during the 21st century. (For instance, many medical schools have substantially restructured their curricula and many students do not regularly attend class in person.) These changes may have implications for the efficacy of ethics curricula. Second, there are very few head-to-head comparisons of ethics education interventions. This is notable because ethics curricula are diverse. Finally, and most importantly, there is almost no evidence that these curricula lead to better decision-making in clinical settings — where it matters.
This piece was originally published on Bill of Health, the blog of Petrie-Flom Center at Harvard Law School.

Health professions students are often required to complete training in ethics. But these curricula vary immensely in terms of their stated objectives, time devoted to them, when during training students complete them, who teaches them, content covered, how students are assessed, and instruction model used. Evaluating these curricula on a common set of standards could help make them more effective. In general, it is good to evaluate curricula. But there are several reasons to think it may be particularly important to evaluate ethics curricula. The first is that these curricula are incredibly diverse, with one professor noting that the approximately 140 medical schools that offer ethics training do so “in just about 140 different ways.” This suggests there is no consensus on the best way to teach ethics to health professions students. The second is that time in these curricula is often quite limited and costly, so it is important to make these curricula efficient. Third, when these curricula do work, it would be helpful to identify exactly how and why they work, as this could have broader implications for applied ethics training. Finally, it is possible that some ethics curricula simply don’t work very well. In order to conclude ethics curricula work, at least two things would have to be true: first, students would have to make ethically suboptimal decisions without these curricula, and second, these curricula would have to cause students to make more ethical decisions. But it’s not obvious both these criteria are satisfied. After all, ethics training is different from other kinds of training health professions students receive. Because most students come in with no background in managing cardiovascular disease, effectively teaching students how to do this will almost certainly lead them to provide better care. But students do enter training with ideas about how to approach ethical issues. 
If some students’ approaches are reasonable, these students may not benefit much from further training (and indeed, bad training could lead them to make worse decisions). Additionally, multiple studies have found that even professional ethicists do not behave more morally than non-ethicists. If a deep understanding of ethics does not translate into more ethical behavior, providing a few weeks of ethics training to health professions students may not lead them to make more ethical decisions in practice — a primary goal of these curricula. One challenge in evaluating ethics curricula is that people often disagree on their purpose. For instance, some have emphasized “[improving] students’ moral reasoning about value issues regardless of what their particular set of moral values happens to be.” Others have focused on a variety of goals, from increasing students’ awareness of ethical issues, to learning fundamental concepts in bioethics, to instilling certain virtues. Many of these objectives would be challenging to evaluate: for instance, how does one assess whether an ethics curriculum has increased a student’s “commitment to clinical competence and lifelong education”? And if the goals of ethics curricula differ across institutions, would it even be possible to develop a standardized assessment tool that administrators across institutions would be willing to use? These are undoubtedly challenges. But educators likely would agree upon at least one straightforward and assessable objective: these curricula should cause health professions students to make more ethical decisions more of the time. This, too, may seem like an impossible standard to assess: after all, if people agreed on the “more ethical” answers to ethical dilemmas, would these classes need to exist in the first place? But while medical ethicists disagree in certain cases about what these “more ethical” decisions are, in most common cases, there is consensus. 
For instance, the overwhelming majority of medical ethicists agree that, in general, capacitated patients should be allowed to make decisions about what care they want, people should be told about the major risks and benefits of medical procedures, patients should not be denied care because of past unrelated behavior, resources should not be allocated in ways that primarily benefit advantaged patients, and so on. In other words, there is consensus on how clinicians should resolve many of the issues they will regularly encounter, and trainees’ understanding of this consensus can be assessed. (Of course, clinicians also may encounter niche or particularly challenging cases over their careers, but building and evaluating ethics curricula on the basis of these rare cases would be akin to building an introductory class on cardiac physiology around rare congenital anomalies.) Ideally, ethics curricula could be evaluated via randomized controlled trials, but it would be challenging to randomize some students to take a course and others not to. However, at some schools, students could be randomized to completing ethics training at different times of year, and assessments could be done before all students had completed the training and after some students had completed it. There are also questions about how to assess whether students will make more ethical decisions in practice. More schools could consider using simulations of common ethical scenarios, where they might ask students to perform capacity assessments or seek informed consent for procedures. But simulations are expensive and time-consuming, so some schools could start by simply conducting a standard pre- and post-course survey assessing how students plan to respond to ethical situations they are likely to face. 
Of course, saying you will do something on a survey does not necessarily mean you will do that thing in practice, but this could at least give programs a general sense of whether their ethics curricula work and how they compare to other schools’. Most health professions programs provide training in ethics. But simply providing this training does not ensure it will lead students to make more ethical decisions in practice. Thus, health professions programs across schools should evaluate their curricula using a common set of standards.
This piece was originally published on Bill of Health, the blog of Petrie-Flom Center at Harvard Law School.

Today, the average medical student graduates with more than $215,000 of debt from medical school alone. The root cause of this problem — rising medical school tuitions — can and must be addressed. In real dollars, a medical degree costs 750 percent more today than it did seventy years ago, and more than twice as much as it did in 1992. These rising costs are closely linked to rising debt, which has more than quadrupled since 1978 after accounting for inflation. Physicians with more debt are more likely to experience burnout, substance use disorders, and worse mental health. And, as the cost of medical education has risen, the share of medical students hailing from low-income backgrounds has fallen precipitously, compounding inequities in medical education. These changes are bad for patients, who benefit from having doctors who hail from diverse backgrounds and who aren’t burned out. But the high cost of medical education is bad for patients in other ways too, as physicians who graduate with more debt are more likely to pursue lucrative specialties, rather than lower-paying but badly needed ones, such as primary care. Doctors with more debt are also less likely to practice in underserved areas. The high cost of medical education also is bad for the public. A substantial portion of medical school loans are financed by the government, and nearly 40 percent of medical students plan to pursue programs like Public Service Loan Forgiveness (PSLF). When students succeed at having their loans forgiven, taxpayers wind up footing a portion of the bill, and the higher these loans are, the larger the bill is. The public benefits from programs like PSLF to the extent that such programs incentivize physicians to pursue more socially valuable careers. But these programs don’t address the underlying cause of rising debt: rising medical school tuitions. Despite the detrimental effects of the rising cost of medical education, little has been done to address the issue. 
There are several reasons for this. First, most physicians make a lot of money. Policymakers may correspondingly view medical students’ debt — which most students can repay — as a relatively minor problem, particularly when compared to other students’ debt. The Association of American Medical Colleges (AAMC) employed similar logic last year when it advised prospective medical students to not “let debt stop your dreams,” writing: “Despite the expense, medical school remains an outstanding investment. The average salary for physicians is around $313,000, up from roughly $210,000 in 2011.” Although this guidance may make medical students feel better, it should hardly reassure the public, as to some extent, doctors’ salaries contribute to high health care costs. Another challenge to reducing the cost of medical education is the lack of transparency about how much it costs to educate medical students. Policymakers tend to defer to medical experts about issues related to medicine, meaning medical schools and medical organizations are largely responsible for regulating medical training. Unsurprisingly, medical schools — the institutions that set tuitions and benefit from tuition increases — have taken relatively few steps to justify or contain rising costs. Perhaps more surprisingly, the organization responsible for accrediting medical schools, the Liaison Committee on Medical Education (LCME), requires medical schools to provide students with “effective financial aid and debt management counseling,” but does not require medical schools to limit tuition increases or to demonstrate that tuitions reflect the cost of training students. This is worrisome, as some scholars have noted that the price students pay may not reflect the cost of educating them. 
After all, medical schools have tremendous power to set prices, as most prospective students will borrow as much money as they need to in order to attend: college students spend years preparing to apply to medical school, most applicants are rejected, and many earn admission to only one school. And although some medical school faculty claim that medical schools lose money on medical students, experts dispute this, with one dean suggesting that it costs far less to educate students than students presently pay, and the tuition students pay instead “supports unproductive faculty.” Medical schools should take several steps to reduce students’ debt burdens. First, schools could reduce tuitions by reducing training costs. Schools could do so by relying more on external curricular resources, rather than generating all resources internally. More than a third of medical students already “almost never” attend lectures, instead favoring resources that are orders of magnitude cheaper than medical school tuitions. The fact that students opt to use these resources — often instead of attending classes they paid tens of thousands of dollars for — suggests students find these resources to be effective teaching tools. Schools should thus replace more expensive and inefficient internal resources with outside ones. Schools could also reduce the cost of a medical degree by decreasing the time it takes to earn one. More schools could give students the option of pursuing a three-year medical degree, as many medical students do very little during their fourth year. A second possibility would be to shift more of the medical school curriculum into students’ undergraduate educations. For instance, instead of requiring pre-medical students to take two semesters of physics, medical schools could instead require students to take one semester of physics and one semester of physiology, as some schools have done. 
Finally, medical schools could simply reduce the amount they charge students, as the medical schools affiliated with NYU, Cornell, and Columbia have done. Because tuition represents only a tiny fraction of medical schools’ revenues — as one dean put it, a mere “rounding error” — reducing the cost of attendance would only marginally affect schools’ bottom lines. Rather than eliminating tuition across the board, medical schools should focus on reducing the tuitions of students who commit to pursuing lower-paying but valuable specialties or working in underserved areas. Unfortunately, most medical schools have demonstrated little willingness to take these steps. It is therefore likely that outside actors, like the LCME and the government, will need to intervene to improve financial transparency, ensure tuitions match the cost of training, and contain rising debt. This piece was originally published on Bill of Health, the blog of the Petrie-Flom Center at Harvard Law School.

In my junior year of college, my pre-medical advisor instructed me to take time off after graduating and before applying to medical school. I was caught off guard. At 21, it had already occurred to me that completing four years of medical school, at least three years of residency, several more years of fellowship, and a PhD would impact my ability to start a family. I was wary of letting my training expand even further, but this worry felt so vague and distant that I feared expressing it would signal a lack of commitment to my career. I now see that this worry was well-founded: the length of medical training unnecessarily compromises trainees’ ability to balance their careers with starting families. In the United States, medical training has progressively lengthened. At the beginning of the 20th century, medical schools increased their standards for admission, requiring students to take premedical courses prior to matriculating, either as undergraduates or during post-baccalaureate years. Around the same time, the length of medical school increased from two to four years. In recent decades, the percentage of physicians who pursue training after residency has increased in many fields. And, like me, a growing proportion of trainees are taking time off between college and medical school, pursuing dual degrees, or taking non-degree research years, further prolonging training. The expansion of medical training is understandable. We know far more about medicine than we did a hundred years ago, and there is correspondingly more to teach. The increased structure and regulation of medical training have made medical training safer for patients and ensured a standard of competency for physicians. Getting into medical school and residency has also become more competitive, meaning many trainees feel they must spend additional time bolstering their resumes. However, medical training is now longer than it needs to be. 
Many medical students do very little during their fourth year of medical school. Residency programs also require trainees to serve for a set number of years, rather than until they have mastered the skills their fields require, inflating training times. And many medical schools and residency programs require trainees to spend time conducting research, whether or not they are interested in academic careers. While many trainees appreciate these opportunities, they should not be compulsory. There are many arguments for shortening medical training. In other parts of the world, trainees complete six-year medical degrees, compared to the eight years required of most trainees in the U.S. (four years of college and four years of medical school). The length of medical training increases physician debt and healthcare costs. In addition, it decreases the supply of fully trained physicians, a serious problem in a country facing physician shortages. Since burnout is prevalent among trainees, shortening training could also mitigate burnout. And critically, the length of medical training makes it challenging for physicians to start families. Because medical trainees work long hours, have physically demanding jobs, and are burdened by substantial debt, they are sometimes advised or pressured to wait until they complete their training before having children. But high rates of infertility and pregnancy complications among female physicians who defer childbearing suggest this is a treacherous path. Starting a family during training hardly feels like a safer option. In my first year of medical school, I attended a panel of surgical subspecialty residents. I asked whether their residencies would be compatible with starting a family. The four men looked at each other before passing the microphone to the sole woman resident, who told us she could not imagine having a child during residency, as she didn’t even have time to do laundry, so instead ordered new socks each week. 
In my second year of medical school, a physician spoke to us about having a child during residency. I asked how she and her husband afforded full-time child care on resident salaries. She confided that they had maxed out their credit cards and added this debt to their medical school debt to pay for daycare. There have been encouraging anecdotes, too, but these have often involved healthy parents relocating, significant financial resources, or partners with less intense careers. Admittedly, shortening medical training would not be a magic bullet. Physicians who have completed their training and have children still face myriad challenges, and these challenges disproportionately affect women. Maternal discrimination is common, and compared to men, women physicians with children spend more than eight additional hours per week on parenting and domestic work and correspondingly spend seven fewer hours on their paid work. Nearly 40 percent of women physicians leave full-time practice within six years of completing residency, with most citing family as the reason why. However, shortening medical training would enable more trainees to defer childbearing until the completion of their training. This would help in several ways. For instance, while most trainees are required to work certain rotations (e.g., night shifts), attending physicians often have more flexibility in choosing when and how much they work. In addition, attending physicians earn much more money than trainees, expanding the child care options they can afford. In recent years, many changes have been made to support trainees with children, from expanding access to lactation rooms, to increasing parental leave, to creating more child care facilities at hospitals. But long training times remain a persistent and reducible barrier. To address this, medical schools could require students to take only highly relevant coursework, reducing the number of applicants who would need to complete additional coursework after college. 
Medical schools could also increase the number of three-year medical degree pathways and make research requirements optional. Residency and fellowship programs could create more opportunities for integrated residency and fellowship training and could similarly make research time optional. It will be challenging to create efficient paths that provide excellent training without creating impossibly grueling schedules. But this is a challenge that must be confronted: physicians should be able to balance starting a family with pursuing their careers, and streamlining medical training will facilitate this. Haley Sullivan, Emma Pierson, Niko Adamstein, and Sam Doernberg gave helpful feedback on this post.

Many medical journals will publish ~1,000-word opinion pieces written by medical students. There is a lot of luck involved in getting these published, but here are tips others have given me, as well as lessons I have learned on how to do this:

