A new editorial in Nature argues that discussions of the risks AI poses today (including biased decision-making, the elimination of jobs, autocratic states’ use of facial recognition, and algorithmic bias in how social goods are distributed) are “being starved of oxygen” by debates about AI existential risk. Specifically, the authors assert that “like a magician’s sleight of hand, [attention to AI x-risk] draws attention away from the real issue.” They encourage us to “stop talking about tomorrow’s AI doomsday when AI poses risks today.”

People have been making this claim a lot, and while different versions have been put forward, the general take seems to be something like:

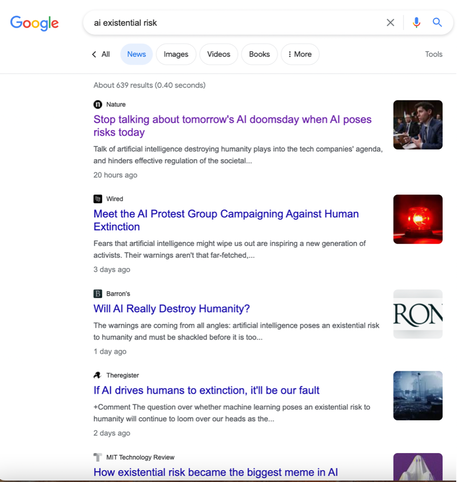

Versions of this claim rely on the assumption that there is a specific kind of relationship between discussions about AI x-risks and other AI risks, namely:

- A parasitic relationship: Discussing AI x-risk causes us to discuss other AI risks less.

However, the relationship could instead be:

- A mutual relationship: Discussing AI x-risk causes us to discuss other AI risks more.

Or:

- A commensal relationship: Discussing AI x-risk doesn’t cause us to discuss other AI risks less or more.

It’s not clear that we generally starve issues of oxygen by discussing related ones: for instance, the Nature editorial does not suggest that discussions about privacy risks come at the expense of discussions about misinformation risks.

And there are reasons to think there could be a mutual relationship between discussions about different kinds of AI risks. Some of the risks posed by AI that are discussed in the Nature editorial may lie on the pathway to AI x-risk. Talking about AI x-risk may help direct attention and resources to mitigating present risks, and addressing certain present risks may help mitigate x-risks. Indeed, there are significant overlaps in the regulatory solutions to these issues: that is why Bruce and I suggest that we should discuss and address the existing and emerging risks posed by misaligned AI in tandem.

There are also reasons to think that the relationship between discussions of AI x-risk and other risks could be commensal. Public discourse is messy and complicated. It isn’t a limited resource in the way that kidneys or taxpayer dollars are. Writing a blog post about AI x-risk doesn’t lead to one fewer blog post about other AI risks. While discourse is partly composed of limited resources (e.g., article space in a print newspaper), even here, there may not be direct tradeoffs between discussions of AI x-risk and discussions of other AI risks. Indeed, many of the articles I have read about AI x-risk also discuss other risks posed by AI.
To assess this, I did a quick Google search for “AI existential risk” and reviewed the first five articles that came up under “News.” All five mention risks that are not existential: those described above in Nature, algorithmic bias in Wired, weapons that are “not an existential threat” in Barron’s, mass unemployment in the Register, and discriminatory hiring and misinformation in MIT Tech Review (see screenshot below). So even if newspapers are devoting more article space to AI x-risk, and this leaves less article space for everything else, it’s not clear that this leads to fewer words being written about other AI risks.

My point is that we should not accept at face value the claim that discussing AI x-risk causes us to pay insufficient attention to other, extremely important risks related to AI. We should aim to resolve uncertainty about these (potential) tradeoffs with data, i.e., by tracking what articles have been published on each issue over time, looking at what articles are getting cited and shared, assessing what government bodies are debating and what policies they are issuing, and so on. Asserting that there are direct tradeoffs where there may be none risks undermining efforts to have productive discussions about the important, intersecting risks posed by AI.
